Time Complexity for JavaScript Developers: What Big O is really about

Time flies O(n)

Senior dev: Your function has an exponential running time. It won't scale properly.

Hi guys!

I've been coding for 4 years now, and until recently, time complexity and Big O notation really scared me, so, like many of us, I skipped them. In this article, I'm going to explain what time complexity really is and clear up the complexity around it.

What is Time Complexity?

Most of the time, we write code to solve a problem, and there are often several ways to solve the same problem.

Time complexity is a way to compare those solutions by looking at how their running time grows as the input gets bigger.

By definition, time complexity is the amount of time taken by an algorithm to run, as a function of the length of the input.

What is an Algorithm?

An algorithm, in computer programming, is a finite sequence of well-defined instructions, typically executed in a computer, to solve a class of problems or to perform a common task.

Why don't we just record the time it takes for the algorithm to run?

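Simply because the time an algorithm takes to run depends on things like the programming language, the processing power of the machine, etc. The same code can run faster or slower on a different computer without the algorithm changing at all.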

How do we measure time complexity, then?

Using Big O notation.

Big O notation describes an upper bound on how an algorithm's running time grows. In practice, we usually use it to describe how an algorithm performs in its worst-case scenario.

Big O notation expresses the running time of an algorithm in terms of:

- the size of the input (denoted by n)

- how quickly the number of operations grows as n grows.
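Since Big O is about how the number of operations grows with n, one way to see it is to count operations instead of timing them. The sketch below is a hypothetical illustration (`countLinearOps` and `countQuadraticOps` are names made up for this example): it counts loop iterations for a single loop and for a nested loop as n grows.

```javascript
// Counts the iterations of a single loop over an input of size n
function countLinearOps(n) {
  let ops = 0;
  for (let i = 0; i < n; i++) {
    ops++; // one operation per element
  }
  return ops;
}

// Counts the iterations of a loop nested inside another loop
function countQuadraticOps(n) {
  let ops = 0;
  for (let i = 0; i < n; i++) {
    for (let j = 0; j < n; j++) {
      ops++; // one operation per (i, j) pair
    }
  }
  return ops;
}

for (const n of [10, 100, 1000]) {
  console.log(n, countLinearOps(n), countQuadraticOps(n));
}
// 10 10 100
// 100 100 10000
// 1000 1000 1000000
```

The counts are the same on any machine, which is exactly why Big O talks about operations rather than seconds.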

Why is it important?

- It helps us make better choices, since nobody wants to use a slow service.

- Using the wrong algorithm can make you pay for unnecessary computational power on servers.

- It gives us a means to express how fast an algorithm is without writing the entire algorithm.

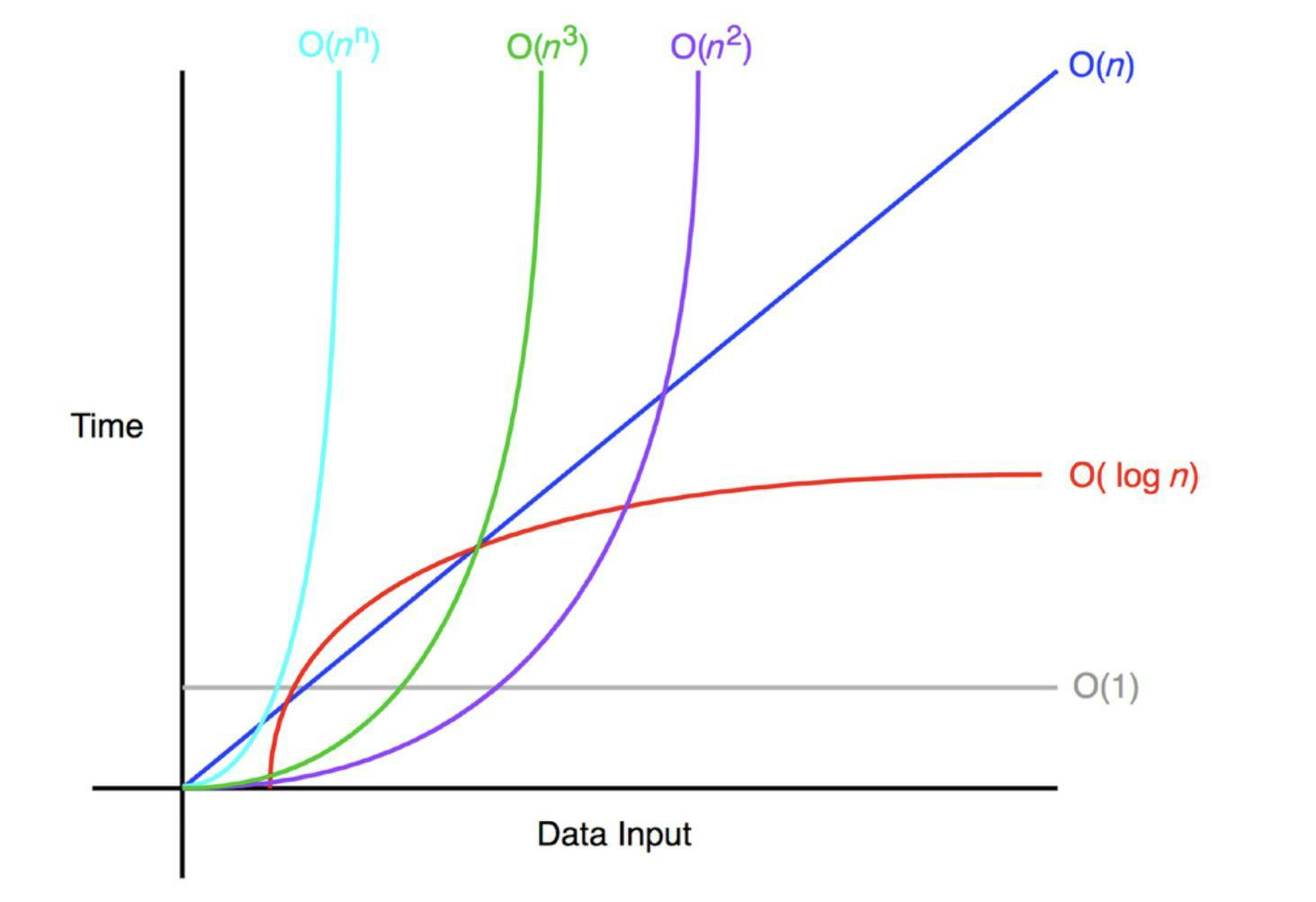

Types of Time Complexities

There are several common time complexities. Let’s go through them one by one:

Constant time – O(1)

In constant time, the running time of the algorithm doesn't change with the size of the input.

It stays the same no matter what.

Example:

The perfect example is a function that returns the first item of an array or list.

No matter the size of the array, the number of operations doesn't change!

```javascript
function getFirstItem(arr) {
  // Reading one index is a single step, however long the array is
  let firstItem = arr[0];
  return firstItem;
}
```
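Going back to the idea that one problem can have several solutions, here is a sketch of two ways to add up the numbers from 1 to n. The function names are made up for this example. The loop does more work as n grows, while the formula always does the same few operations.

```javascript
// O(n): adds the numbers one at a time, so the work grows with n
function sumToLoop(n) {
  let total = 0;
  for (let i = 1; i <= n; i++) {
    total += i;
  }
  return total;
}

// O(1): the formula n * (n + 1) / 2 takes the same few steps for any n
function sumToFormula(n) {
  return (n * (n + 1)) / 2;
}

console.log(sumToLoop(100), sumToFormula(100)); // 5050 5050
```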

Linear time – O(n)

In linear time, the running time grows in direct proportion to the size of the input.

So, as the input grows, the number of operations grows at the same rate.

Example:

Finding an item in an array with linear search.

As the number of items in the array increases, the number of steps increases too.

Let's say checking each item takes three steps:

- Pick an item.

- Check if it's what we are looking for.

- Return the answer, or go to the next one.

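```javascript
function search(arr, n) {
  // Check each item in turn until we find n
  for (let i = 0; i < arr.length; i++) {
    if (arr[i] === n) {
      return arr[i];
    }
  }

  // We looked at every item and n wasn't there
  return null;
}
```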

If we have 2 items, we repeat those steps 2 times, that's 2 * 3 = 6 operations.

If we have 4 items, we repeat them 4 times, that's 4 * 3 = 12 operations.

If we have 10 items, we repeat them 10 times, that's 10 * 3 = 30 operations.

Big O drops constant factors like that 3, so this is written as O(n) rather than O(3n).

You might be thinking: what if we find the element on the first try (the best-case scenario)?

Well, remember that we use Big O notation to describe the worst-case scenario, where the item is the last one or isn't in the array at all.
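To see the best and worst cases side by side, here is a hypothetical instrumented version of the linear search (`searchWithCount` is a made-up name) that reports how many comparisons it made.

```javascript
// Same idea as search(), but also reports how many comparisons were made
function searchWithCount(arr, n) {
  let comparisons = 0;
  for (let i = 0; i < arr.length; i++) {
    comparisons++;
    if (arr[i] === n) {
      return { found: true, comparisons };
    }
  }
  return { found: false, comparisons };
}

const items = [4, 8, 15, 16, 23, 42];
console.log(searchWithCount(items, 4));  // { found: true, comparisons: 1 }  (best case)
console.log(searchWithCount(items, 99)); // { found: false, comparisons: 6 } (worst case)
```

In the worst case the number of comparisons equals the length of the array, which is why linear search is O(n).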

Logarithmic time – O(log n)

A logarithmic-time algorithm cuts the remaining input by a constant fraction (often in half) at each step, so the number of operations grows very slowly as the input size increases. Doubling the input adds only about one extra step.
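A quick way to see why halving leads to such slow growth is to count how many times n can be cut in half before only one item is left. This is a hypothetical helper, `countHalvings`, written just for illustration.

```javascript
// Counts how many times n can be halved before reaching 1,
// which is roughly log2(n)
function countHalvings(n) {
  let steps = 0;
  while (n > 1) {
    n = Math.floor(n / 2); // discard half of the remaining input
    steps++;
  }
  return steps;
}

console.log(countHalvings(8));       // 3
console.log(countHalvings(1024));    // 10
console.log(countHalvings(1000000)); // 19
```

Going from about a thousand items to a million only adds about 9 more steps.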

Example:

A binary search algorithm.

Binary search finds an item in a sorted array by repeatedly dividing the search interval in half. It uses the fact that the array is sorted to rule out half of the remaining items with each comparison, which greatly reduces the number of operations needed.

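```javascript
// Returns the index of n in the sorted array, or -1 if it isn't there.
// Passing low/high indices (instead of slicing) avoids copying the array on each call.
function binarySearch(array, n, low = 0, high = array.length - 1) {
  // Empty search interval: n is not in the array
  if (low > high) {
    return -1;
  }

  // split the current interval in half
  const half = Math.floor((low + high) / 2);
  const current = array[half];

  if (current === n) {
    return half;
  } else if (n > current) {
    // n can only be in the right half
    return binarySearch(array, n, half + 1, high);
  } else {
    // n can only be in the left half
    return binarySearch(array, n, low, half - 1);
  }
}
```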

Linearithmic time – O(n log n)

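A linearithmic algorithm is slightly slower than a linear one, but still much faster than a quadratic one as the input grows.

The classic example is merge sort.

Merge sort repeatedly splits an array in half until each piece has a single element, then merges the pieces back together in sorted order. An array of n items can only be halved about log n times, and each level of merging touches all n items, which gives O(n log n). The key step is merge, which takes two sorted arrays and combines them into a single sorted one.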

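```javascript
function merge(left, right) {
  const sortedArr = []; // the sorted elements will go here
  let i = 0; // position in left
  let j = 0; // position in right

  while (i < left.length && j < right.length) {
    // insert the smaller front element into sortedArr
    // (index pointers avoid shift(), which re-indexes the array on every call)
    if (left[i] <= right[j]) {
      sortedArr.push(left[i++]);
    } else {
      sortedArr.push(right[j++]);
    }
  }

  // one side is used up; append whatever remains of the other
  return sortedArr.concat(left.slice(i), right.slice(j));
}
```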
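The merge function only combines two arrays that are already sorted, so here is a minimal sketch of the full recursive merge sort built around that idea. To keep it self-contained it carries its own copy of the merge step under the hypothetical name `mergeSorted`; `mergeSort` is likewise a name chosen for this example.

```javascript
// Merges two already-sorted arrays into one sorted array in O(n)
function mergeSorted(left, right) {
  const result = [];
  let i = 0;
  let j = 0;
  while (i < left.length && j < right.length) {
    if (left[i] <= right[j]) {
      result.push(left[i++]);
    } else {
      result.push(right[j++]);
    }
  }
  return result.concat(left.slice(i), right.slice(j));
}

// Splits the array in half (about log n levels deep), sorts each half,
// then merges them: O(n) work per level gives O(n log n) overall
function mergeSort(arr) {
  if (arr.length <= 1) {
    return arr; // zero or one element is already sorted
  }
  const middle = Math.floor(arr.length / 2);
  const left = mergeSort(arr.slice(0, middle));
  const right = mergeSort(arr.slice(middle));
  return mergeSorted(left, right);
}

console.log(mergeSort([38, 27, 43, 3, 9, 82, 10]).join(", "));
// 3, 9, 10, 27, 38, 43, 82
```

Each call copies its half with `slice`, which costs extra memory but keeps the running time at O(n log n), since every level of recursion copies n items in total.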

Quadratic time – O(n^2)

When an algorithm runs in quadratic time, it slows down dramatically as the length of the input increases, because the number of operations grows with the square of n.

Let's say we have an input of length 2 in a quadratic-time algorithm.

The number of operations becomes 2^2 = 4.

If we raise it to 3, it becomes 3^2 = 9.

For 4, it's 4^2 = 16.

An example of a quadratic-time algorithm would be checking an array for duplicates by comparing every item with every other item:

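```javascript
function hasDuplicates(arr) {
  // Compare every item (outer) with every other item (inner): about n * n checks
  for (let outer = 0; outer < arr.length; outer++) {
    for (let inner = 0; inner < arr.length; inner++) {
      if (outer === inner) continue; // don't compare an item with itself

      if (arr[outer] === arr[inner]) {
        return true;
      }
    }
  }

  return false;
}
```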
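To contrast with the nested loops, here is a sketch of the same check done in a single pass with a JavaScript `Set`. It assumes `Set` lookups take constant time on average, which is how the major engines implement them (the spec itself only requires sublinear access), so treat the O(n) label as typical rather than guaranteed. `hasDuplicatesFast` is a hypothetical name.

```javascript
// Single pass: remember each value, and stop as soon as one repeats
function hasDuplicatesFast(arr) {
  const seen = new Set(); // values we've already visited
  for (const item of arr) {
    if (seen.has(item)) {
      return true; // we've seen this value before
    }
    seen.add(item);
  }
  return false;
}

console.log(hasDuplicatesFast([1, 2, 3, 2])); // true
console.log(hasDuplicatesFast([1, 2, 3, 4])); // false
```

One difference: `Set` compares values with SameValueZero, so `[NaN, NaN]` counts as a duplicate here, while the `===` check in `hasDuplicates` never matches `NaN`.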

Quadratic time is a type of polynomial time.

There are also higher powers:

- Cubic time – O(n^3)

- Quartic time – O(n^4)

- and Quintic time – O(n^5)

Exponential time – O(2^n)

Exponential time means that the number of operations performed by the algorithm doubles every time the input grows by one.

It sounds really bad, but exponential algorithms still show up in many real problems.

One example is a naive recursive function that computes Fibonacci numbers.

Fibonacci numbers are the numbers in the following integer sequence, where each number is the sum of the two before it.

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, ... and so on.

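```javascript
function fibonacci(n) {
  // Base cases: fibonacci(0) = 0, fibonacci(1) = 1
  if (n <= 1) {
    return n;
  }

  // Each call makes two more calls, so the call tree keeps branching
  return fibonacci(n - 1) + fibonacci(n - 2);
}
```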
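To see how quickly the naive version's work grows, here is a sketch that counts its calls, followed by a memoized version for contrast. `countFibCalls` and `fibonacciMemo` are hypothetical names, and memoization (caching results in a `Map`) is a swapped-in technique, not part of the original example.

```javascript
// Runs the same naive recursion as fibonacci() and returns how many calls it made
function countFibCalls(n) {
  let calls = 0;
  function fib(k) {
    calls++;
    if (k <= 1) {
      return k;
    }
    return fib(k - 1) + fib(k - 2);
  }
  fib(n);
  return calls;
}

console.log(countFibCalls(10)); // 177
console.log(countFibCalls(20)); // 21891

// Memoized version: each value from 2 to n is computed only once,
// so the number of calls grows linearly with n instead of exponentially
function fibonacciMemo(n, memo = new Map()) {
  if (n <= 1) {
    return n;
  }
  if (memo.has(n)) {
    return memo.get(n);
  }
  const result = fibonacciMemo(n - 1, memo) + fibonacciMemo(n - 2, memo);
  memo.set(n, result);
  return result;
}

console.log(fibonacciMemo(50)); // 12586269025
```

Adding just 10 to n multiplies the naive call count by more than 100, while the memoized version handles n = 50 instantly.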

Factorial time – O(n!)

The factorial of a number n (written n!) is the product of all positive integers less than or equal to n.

For example,

- 3! = 3 * 2 * 1 = 6

- 4! = 4 * 3 * 2 * 1 = 24

- 5! = 5 * 4 * 3 * 2 * 1 = 120

- 10! = 10 * 9 * 8 * 7 * 6 * 5 * 4 * 3 * 2 * 1 = 3628800

As you can see, it quickly gets out of hand.

You're probably thinking: why would we ever need such a slow algorithm?

Because many real-life problems don't have easy solutions. Sometimes we have to get our hands dirty.

One example is the Traveling Salesman Problem.

It's a simple thought problem.

Imagine you had to travel to 3 cities (Ikeja, Ikorodu, Lekki) and you wanted to know the shortest route that visits all of them.

One way to solve this is to calculate every possible ordering of the route (brute force):

Ikeja to Ikorodu to Lekki

Ikorodu to Lekki to Ikeja

Lekki to Ikeja to Ikorodu

Lekki to Ikorodu to Ikeja

Ikorodu to Ikeja to Lekki

Ikeja to Lekki to Ikorodu

These are all the possible orderings: 3! = 6.

If there were one more city, there would be 4! = 24 possible routes, as we saw earlier.
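To see the factorial growth in code, here is a sketch of the brute-force step: generating every possible route (permutation) of the cities. `allRoutes` is a hypothetical name, and "Yaba" is just an extra city added for illustration. It only counts routes; a real solver would also add up the distance of each route and keep the shortest.

```javascript
// Returns every ordering (permutation) of the given cities
function allRoutes(cities) {
  if (cities.length <= 1) {
    return [cities];
  }
  const routes = [];
  cities.forEach((city, i) => {
    // fix `city` as the first stop, then order the remaining cities
    const rest = [...cities.slice(0, i), ...cities.slice(i + 1)];
    for (const route of allRoutes(rest)) {
      routes.push([city, ...route]);
    }
  });
  return routes;
}

console.log(allRoutes(["Ikeja", "Ikorodu", "Lekki"]).length);         // 6
console.log(allRoutes(["Ikeja", "Ikorodu", "Lekki", "Yaba"]).length); // 24
```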

Conclusion

So far, we have seen the good, the bad, and the really bad. Whatever its time complexity, every algorithm has a use case, but we should be careful and only reach for a slower one when it's necessary.

Thanks for reading!